XR and the Metaverse: how to achieve interoperability, wow factor and mass adoption

By Guido Meardi, CEO and co-founder of V-Nova; Laurent Scallie, CEO and founder of Redpill VR; Tristan Salome, CEO and founder of PresenZ; June 2022

As global giants like Meta, NVIDIA, Apple, Microsoft and even HSBC make moves into the space, the metaverse is on the lips of numerous technologists. Anticipation is growing around how the new virtual space will impact our lives, with at-times uber-optimistic media coverage and even tabloid curiosities such as the first metaverse wedding in India. Fun fact: it wasn't even the first metaverse wedding, since one already took place in VR at Cybermind in San Francisco in 1994.

Weed out the inevitable hype and make no mistake: in some form or fashion, the Metaverse and Extended Reality (XR) are here to stay and will become the next-generation Internet. It pays to understand them better, along with the corresponding requirements and technical implications.

With affordable XR devices becoming fit for purpose, it is becoming clear that the last bottleneck to overcome is the huge volume of data required to enable immersive experiences at suitable quality, including via “split computing” or “cloud rendering”, which are essential to both technical feasibility and inter-device interoperability.

There are two distinct sets of technical challenges, related to what happens before 3D rendering and after 3D rendering: overcoming them is a top priority for everyone wishing to make the most of the Metaverse opportunity.

The good news is that solutions to those challenges do exist.

Split computing

In computer graphics, the process of dividing computation across two or more devices, with 3D rendering performed on a device (nearby or remote) other than the display device.

Rendering may also be further split across multiple resources ("hybrid rendering"). The resulting rendered frames are then streamed (“cast”) to the display device.

This blog in a nutshell

In this blog post we went for depth. After all, if it’s too much you can just stop reading or skim-read it.

In the full text you will find the following:

- What are the Metaverse and XR, beyond the hype.

- Why it is happening now, as opposed to ten years ago or five years from now.

- How much money it is already moving, not to mention future projections.

- The three key user requirements for mass-market adoption: lighter devices, interoperability, no compromise on quality.

- Moore’s Law and Koomey’s Law cannot bridge the graphics processing power gap between XR devices and gaming hardware. Consequently, high-quality interoperable XR applications will need to operate via split computing and ultra-low-latency video casting, with large-scale delivery supported by hybrid rendering and Content Rendering Networks (CRNs, the metaverse evolution of Content Delivery Networks).

- The data compression challenges of delivering volumetric objects to rendering devices and then casting high-quality ultra-low-latency video to XR displays will require low-complexity multi-layer data coding methods, which can make efficient use of available resources and are particularly well-suited to 3D rendering engines.

- Two recently standardized low-complexity multi-layer codecs – MPEG-5 LCEVC and SMPTE VC-6 – have what it takes to enable high-quality Metaverse/XR applications within realistic constraints. LCEVC enhances any video codec (e.g., H.264, HEVC, AV1, VVC) to enable ultra-low-latency delivery of high-quality XR video (or even video + depth) within strict wireless constraints (<30 Mbps), while also reducing latency jitter thanks to the ability to drop top-layer packets on the fly in case of sudden drops in network bandwidth. When applied to HEVC-based or VVC-based MPEG Immersive Video (MIV), LCEVC similarly enables higher quality with lower overall processing load. For non-video volumetric data sets, applying the VC-6 toolkit to point cloud compression has enabled mass distribution of photorealistic 6DoF volumetric video at never-seen-before quality, with excellent feedback from both end users and Hollywood studios.

- Since XR rendering and video casting are by definition 1:1 operations, power usage will grow linearly with the number of users, unlike broadcasting and video streaming. Sustainability and energy consumption are going to be important considerations, adding one more brick to the case for CRNs supported by low-complexity multi-layer coding.

- Many Big Tech companies are investing tens of billions of dollars (each) to suitably position themselves within the Metaverse Gold Rush. As we have seen with the Web, whilst some titans aptly surf the new tidal wave, others will falter, and new ones may grow. The ability to suitably tackle the data volume challenges, promptly leveraging the approaches and standards that are available, will be one of the key factors driving the speed with which the XR meta-universe is brought to the masses, as well as which tech titans will end up on top of the hill.
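The layered idea behind the multi-layer codecs mentioned above can be illustrated with a deliberately simplified sketch. The toy coder below is an assumption for illustration only, not the LCEVC or VC-6 algorithm: it builds a half-resolution base layer, predicts the full-resolution signal by upsampling it, and sends quantized residuals as a top (enhancement) layer. If that top layer is dropped on the fly, the decoder still produces a coarser reconstruction from the base layer alone. The function names `encode` and `decode` are hypothetical.

```python
"""Toy two-layer (base + enhancement) coder for a 1-D signal.

Hypothetical illustration only: NOT the LCEVC or VC-6 algorithm, just the
general idea of a low-resolution base layer plus a residual top layer that
can be dropped when bandwidth suddenly falls.
"""
from typing import List, Optional, Tuple


def downsample(signal: List[float]) -> List[float]:
    # Average each pair of samples (assumes an even-length signal).
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]


def upsample(base: List[float]) -> List[float]:
    # Nearest-neighbour upsampling: repeat each base sample twice.
    return [v for v in base for _ in range(2)]


def encode(signal: List[float], step: float = 1.0) -> Tuple[List[float], List[int]]:
    base = downsample(signal)  # base layer (in practice handled by any codec)
    prediction = upsample(base)
    # Enhancement layer: quantized residuals between original and prediction.
    residuals = [round((s - p) / step) for s, p in zip(signal, prediction)]
    return base, residuals


def decode(base: List[float], residuals: Optional[List[int]], step: float = 1.0) -> List[float]:
    prediction = upsample(base)
    if residuals is None:  # top layer dropped on the fly: base-only output
        return prediction
    return [p + r * step for p, r in zip(prediction, residuals)]


if __name__ == "__main__":
    original = [10.0, 12.0, 20.0, 22.0, 5.0, 9.0, 30.0, 30.0]
    base, residuals = encode(original)
    print("both layers:", decode(base, residuals))
    print("base only:  ", decode(base, None))
```

In a real multi-layer codec the residuals would typically be further transformed and entropy-coded; the sketch only shows why losing the top layer degrades quality gracefully instead of breaking the stream.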

What exactly is the Metaverse?

The Metaverse promises to (further) break down geographical barriers by providing new virtual degrees of freedom for work, play, travel, and social interactions. But what is it, in practice, and why now?

John Riccitiello, CEO of Unity, recently gave a sensible definition of the Metaverse (short for “meta-universe”): “it is the next generation of the Internet, always real time, mostly 3D, mostly interactive, mostly social and mostly persistent”. In practice, it’s a new type of Internet user interface to interact with other parties and access data as a seamless and intuitive augmentation of the actual world in front of us, inspired by the online 3D worlds already familiar to users of multiplayer video games. It aims to replicate the 6 Degrees of Freedom (6DoF) and inherent depth witnessed in the real world, as opposed to the flat 2D interfaces we use to browse online. Often used interchangeably with the term “eXtended Reality” (XR, the combination of Virtual Reality and Augmented Reality), the Metaverse promises to be the next step-change in the evolution of networked computing after the introduction of the World Wide Web back in the 1990s, when we went from relatively esoteric text-based interfaces to intuitive “browsing” of hypertexts with multimedia components.

This new paradigm (Metaverse, Cyberspace, 6DoF, M-thing, Web 3.0, ... pick your favorite moniker) is different and powerful in that it will replicate the inherent depth and intuitiveness of the real world, as opposed to the flat hypertextual interfaces that we use today. In so doing, it will further enhance the participative, social and decentralized elements that we have been experiencing since 2004 with so-called Web 2.0.

To understand what’s necessary for this new 3D archetype to become widespread, it’s useful to review how the current 2D archetype came along. Since Tim Berners-Lee introduced HTML (HyperText Markup Language) in late 1990, almost all websites and software applications have adopted some form of hypertext user interface archetype: unbounded two-dimensional pages with text, images, animations and videos, some of which function as hyperlinks to yet other hypertexts, creating an intricate global “web” of multimedia information. The ease of use of HTML and the availability of the necessary technical enablers to guarantee a responsive user experience (e.g., broadband connectivity, origin servers able to serve requests at high concurrency, Content Delivery Networks for distributed caching, and low-cost user devices with sufficient power to interpret HTML pages in real time) ultimately drove mass adoption of what is now the default way to work on digital data: a two-dimensional interface, an enhanced digital metaphor for a sheet of paper. Many may remember that, before the dawn of the Web, the default way to work with computers in a network was limited to mono-dimensional command-line text interfaces.

Notably, the idea of hypertexts had been floating around for decades before hitting mass adoption: it was theorized by Douglas Engelbart, inventor of the computer mouse, as early as the 1950s, but took root only in the 1990s. Similarly, the Metaverse is anything but a brand-new concept of the 2020s. The concept of interacting with computers by being “immersed” in some sort of 3D cyberspace has been explored by popular cyberpunk books and films since the early 1980s, the term “metaverse” itself was introduced in 1992 by the book Snow Crash, and a renowned attempt at commercializing a metaverse destination was made by Second Life fifteen years ago. It remained a compelling idea for early adopters until key influencers like Matt Ball and Big Tech companies like… Meta started arguing that the Metaverse is finally ready for prime time, with technology enablers ripe to make quality of experience good enough for the early majority and large-scale adoption.

It’s important to highlight that every company with a website or an app should give this some thought. The Metaverse will not be a single winner-takes-all destination, as imagined in the dystopian film “Ready Player One” or envisioned by The Sandbox or Second Life. Much like today’s World Wide Web(s) and app stores, it will be made up of a diverse collection of digital destinations. According to a recent McKinsey survey, American respondents of all ages are ready to embrace the Metaverse, just with different levels of engagement. Millennial and Gen-Z respondents expect to spend a whopping 4.7 hours per day in it in some form, for diverse activities such as shopping, education, health & fitness, live events, customer support, work collaboration, etc.

In fact, the very act of questioning whether or not the M-thing is happening is mostly a prerogative of Boomers and Gen-X. Millennials seem quite convinced, while Gen-Z and Gen-α take it for granted and are kind of already in it when they play games. Regardless of opinions, brands that want to stay relevant to future generations must quickly come to terms with it. In ten years, you can safely bet that virtually every website will have some Metaverse features and that a large portion of global advertising expenditure will happen in the Metaverse. Some destinations will be 100% digital, while others will fuse reality with digital, augmenting our real world with new monetizable features.

In this big transition, likely to shift trillions of dollars in valuation over the coming years, companies driving the growth of the Metaverse should suitably consider all the technology components that will be critical to drive mass adoption of immersive interfaces, which is the point of this blog.

Why now?

From a user perspective, a Metaverse productivity suite and a Metaverse e-commerce site promise to be to the current Microsoft Office suite and to Amazon what the 3D video games Doom and Wing Commander were in the 1990s to Pac-Man and Space Invaders in the 1980s: better visuals, immersion, and the freedom to use a 3D space rather than being constrained to 2D.

Gamers have been playing for decades within 3D worlds with 6 Degrees of Freedom (6DoF), so one may rightfully ask: how come we haven’t already been e-shopping in 3D and/or working on Excel spreadsheets in 6DoF 3D for the past twenty years? If GTA V was able to let us roam freely around a pretty good proxy of Southern California, how come Microsoft Office, Amazon, Instagram and SAP are all still so boringly flat?

In fact, transitioning non-gaming applications to 6DoF 3D has been hindered so far by a few technical barriers, which are now close to being surpassed.

Until recently, the obvious barrier to immersive XR has been clunky VR headsets that could not deliver the resolutions and frame rates necessary for a smooth experience. Some may still remember trying a Virtuality headset in the early Nineties and feeling motion sick. Others may remember comments on form factors being uncool, or fears of “isolating” VR users in their digital worlds. Thanks in large part to Oculus, HTC and Microsoft, commercially available headsets are much lighter, allow for non-isolated Augmented Reality and finally work well enough from a visual standpoint that average consumers may find them appealing. Headsets soon to be released will further address some of the remaining pain points for realism, such as projecting an image of the user’s face to reduce the sense of “physical separation” for people outside the headset, adding sensors for real-time eye-tracking and face-tracking for more realistic social interactions in the Metaverse, and including varifocal display technology to address the focus problem, technically called the “vergence-accommodation conflict”, i.e., displays forcing you to focus about 1.5m away regardless of the depth of what you are looking at. All in all, we are rapidly getting to headsets that are good enough for mass adoption.

However, suboptimal headsets were not the only barrier to the rise of the Metaverse. 6DoF 3D digital worlds do not necessarily require immersive XR, as demonstrated by 3D video games happily thriving on traditional flat screens for decades. Why haven’t we seen office productivity tools, e-commerce websites or other software applications following the steps of video games?

The other big barrier to non-gaming use of 3D worlds has so far been the difficulty of integrating intuitive 6DoF controls with the peripheral input devices that we normally use on our laptops, tablets and mobiles. In fact, I can’t quite see myself working on a PowerPoint presentation with a gaming controller in my hands: I’d struggle to move in 6DoF like that while also typing and all.

Keyboards, mice, touch pads and touch screens are great, but none of them is particularly suited to 6DoF navigation of 3D environments. Excluding game controllers, the holy grail of intuitive 6DoF controls finally matured in the last handful of years: heralded by the commercial success of the Microsoft Kinect in 2010, gesture controls driven by inexpensive cameras and Infra-Red proximity sensors are now found in pretty much every device. If you have tried one of the latest VR headsets you probably know what I mean: that “wow moment” when you first wave your hands around you, stretch your fingers in various ways and see them in the digital world moving exactly like your real ones. Plus the possibility of haptic feedback if you hold XR controllers or wear XR bracelets. Plus the ability to still use your real-world keyboard when you need to do so.

As a simple example of an immersive-XR use case that is possible with commercially available technology, imagine putting on a pair of AR eyeglasses and then seeing several large screens and 3D objects floating around you. Imagine using your bare hands to naturally move, zoom, shrink or manipulate them, while still seeing your real-world desk and keyboard through the glasses, so that you can use them normally. Also imagine doing that while collaborating in real time with the 3D avatars of two colleagues, realistically rendered next to you. If you are a long-standing Tom Cruise fan, think “Minority Report” data access and manipulation (but while sitting down, using more natural gestures, and still typing on a keyboard when it makes sense to do so), plus collaboration and social elements unthinkable twenty years ago.

Fig. 1 – With XR headsets, XR glasses or glass-free light-field displays with head and gesture tracking, immersive user interfaces with gesture controls are now a reality. Having personally tried all the above technologies for decades, I can confirm that they (finally) just work.

Importantly, as I have already mentioned, this type of immersive interface will not be limited to XR displays that we wear on our face. Even traditional flat-screen devices such as laptops, mobiles and large screens are being equipped with cameras and IR sensors capable of gesture detection and head tracking. Already today, for just a few thousand dollars one can buy a glass-free auto-stereoscopic 8K screen that tracks head and eye movements so as to show a volumetric scene that we can look at as if it were coming out of the screen, with the screen effectively behaving like a window onto a 6DoF 3D digital world.

Gesture controls are here to stay and will drive a very rapid evolution of User Interfaces, adding new degrees of freedom to our digital life: they are to the Metaverse what mice and trackpads were to the Web. Depending on the application, immersive 3D interfaces with integrated gesture control will come as evolution as much as revolution, similarly to what we have seen in previous decades with hypertexts and touch screen interfaces. Hypertexts and traditional (“rectilinear”) video feeds will also continue to exist in the Metaverse: they will just be displayed in the context of an immersive user interface and manipulated intuitively in 6DoF.

Show me the money

Although the Metaverse may seem like a future concern, it isn’t. In fact, it is already moving substantial money today.

McKinsey estimated the global Metaverse potential at $5 trillion. According to Ernst & Young, during the first six months of 2022 there were over $140 billion of M&A transactions related to the Metaverse, and Metaverse top-line revenues went from practically zero pre-Covid to a whopping $324 billion in 2021. Of course we’re old enough to know the trick of re-classifying existing revenue streams within a new trend, but that figure does include wholly new revenue streams related to immersive virtual experiences, including $120 billion (growing from $6 billion in 2020) of purchases within persistent virtual ecosystems and even $24 billion (from $1 billion in 2020) of decentralized transactions made by selling Non-Fungible Tokens (NFTs). Federico Bonelli, leader of Ernst & Young’s Retail and Luxury Practice in Europe, further commented that “300 million people may already be considered metaverse active users, since they own at least one avatar in at least one virtual world and/or own at least one collectible NFT. Importantly, these people spend three times as many hours per day in social virtual worlds as they spend on traditional social networks”. We may well guess that those are the kind of stats Mark Zuckerberg is looking at.

Non-Fungible Tokens (NFTs)

NFTs are assets that carry a unique digital identity and can be traded between users on a public blockchain. Although NFTs tend to be associated with artwork, they actually represent much more, and are often a component of the Metaverse strategies of leading global companies.

NFTs allow for unique, non-replicable assets within virtual worlds, and as such also make it possible to connect events and transactions in the virtual world with exclusive events and transactions in the real world.

Actually, why not yet?

There is demand for more intuitive and immersive digital applications, and everything I’ve just described is already technically feasible today with relatively inexpensive devices (e.g., a Meta Quest 2 costs 249 USD). Many people have started buying those devices: there will soon be over 20 million households equipped with at least one of the latest-gen headsets. Lighter AR glasses like the Microsoft HoloLens 2 or the Magic Leap 2 are already capable of doing augmented reality as described above, with a new and improved generation expected soon, spurred by other Big Tech heavyweights entering the arena as well as innovative start-ups like Ostendo pushing the envelope of what’s possible. Glass-free autostereoscopic displays that just work – like those of SeeCubic or Dimenco – are getting close to the cost of traditional displays. Pretty much any flat-screen device is being equipped with cameras and IR sensors capable of gesture tracking and head tracking.

If there is consumer demand for more intuitive and immersive digital applications, and all the technology we’ve just described is commercially available today and affordable, why aren’t we all on the Metaverse already? What are the remaining barriers?

Key user requirements for mass market adoption

In adoption-cycle terminology, we have gone from innovators (pre-2020) to early adopters (2020-2022), and new compelling XR experiences are emerging every day. Is it all working well enough and seamlessly enough to also entice the proverbial “early majority”, which inevitably means also non-gaming use cases?

Mass adoption requires meeting three key user requirements:

- XR devices must be small and light, ideally as light as a pair of eye-glasses and with a maximum peak power consumption of 1-2 Watts. That leaves room for very little electronics and battery, which are not suitable to crunch high-quality 3D rendering in real time. Small form-factors are a prerequisite to scale, since people cannot so-to-speak “wear a gaming laptop on their face” (or even a powerful mobile phone) for several hours a day.

- Metaverse applications must be as interoperable as web pages, meaning that very different viewing devices (including both XR headsets/smart-glasses and more traditional TVs, mobile phones, tablets or laptops) with very diverse computational capacities must be able to access them. Interoperability is obviously important to service providers, to ensure the greatest possible user base for their services.

- Quality of experience is non-negotiable: end-users expect visually stunning, realistic, smooth and immersive experiences. This is of little surprise when video gamers now take for granted that the average football game allows them to see the beads of sweat dripping from the brow of their virtual footballers. While traditional 2D user interfaces may tolerate some imperfections, the point of the Metaverse is precisely the illusion of “presence”, which necessarily requires top-notch audio-visual quality, high-quality 3D objects, realistic lighting, high resolutions, high frame rates and low-latency real time. Sketchy objects and sluggish frame rates will not cut it, and may even make users feel (motion) sick. If you were lured into participating in a metaverse “dance party” and then experienced low-quality visuals evoking memories of the Atari 2600’s capabilities, you might be curious for a while, but would be unlikely to repeat the experience. If instead you participated in a metaverse concert with best-in-class immersive sound and visuals, you might indeed enjoy the experience and repeat it regularly. Unsurprisingly, the previously mentioned McKinsey analysis found a high correlation between realism of the experience and frequency of use, pushing companies to build more realistic experiences.

Fig. 2 – Like web pages and apps, Metaverse destinations will have to be as interoperable as possible with XR devices of very different power, from lightweight XR glasses with peak power consumption around 1 Watt to heavier headsets consuming 5-6 Watts and featuring graphics capabilities on par with tier-1 mobile phones (still far from those of game consoles), all the way up to PC-VR set ups with graphics capabilities superior to game consoles.

Fig. 3 – End users are used to experiencing great 3D graphics in video games. They expect nothing less from Metaverse destinations.

The first two requirements call for seamless “split computing”, meaning that most computations, and especially 3D rendering, must be performed on another device, possibly even in the cloud, so that when needed you can time-share more powerful GPUs than you could otherwise equip yourself with. The resulting rendered graphics frames must be streamed to the device as high-resolution, high-framerate, ultra-low-latency stereoscopic video. As we will see in the next section, the constraints associated with XR casting are pretty much the worst possible nightmare for video coding, especially when wireless connectivity (Wi-Fi or 5G) is involved. Luckily, we will also see that there is a standard solution that makes it possible within realistic constraints.
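To give a feel for why XR casting is such a nightmare for video coding, the back-of-the-envelope calculation below estimates the uncompressed bitrate of a stereoscopic stream and the compression ratio needed to fit it into a wireless link. The parameters are assumptions chosen for illustration, not figures from a specific device: 3840x2160 pixels per eye, 90 frames per second, 8-bit 4:2:0 sampling (12 bits per pixel) and a 30 Mbps link, in line with the <30 Mbps wireless constraint mentioned earlier.

```python
def raw_bitrate_bps(width: int, height: int, views: int, fps: int, bits_per_pixel: int) -> int:
    """Uncompressed video bitrate in bits per second."""
    return width * height * views * fps * bits_per_pixel


def required_compression_ratio(raw_bps: float, link_bps: float) -> float:
    """How many times the raw stream must be shrunk to fit the link."""
    return raw_bps / link_bps


# Assumed parameters (illustrative): 4K per eye, 2 eyes, 90 fps,
# 8-bit 4:2:0 sampling => 12 bits per pixel on average.
raw = raw_bitrate_bps(3840, 2160, views=2, fps=90, bits_per_pixel=12)
ratio = required_compression_ratio(raw, link_bps=30e6)

print(f"raw: {raw / 1e9:.1f} Gbps, compression needed: ~{ratio:.0f}x")
# prints: raw: 17.9 Gbps, compression needed: ~597x
```

Under these assumptions the stream must be compressed roughly 600:1 while keeping latency ultra-low, which is why both compression efficiency and low processing complexity matter on a device with a 1-2 Watt budget.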

Aside from interoperability with any type of user device, interoperability also calls for proper interplay of tools and platforms at application level, on the server side. The targeted ramp-up of high-quality Metaverse destinations requires low technical friction for creation and distribution. Going back to the example above of metaverse concerts, the complexity of the many integrated technologies to deliver a high-fidelity multi-user experience at scale and cross-platform is substantial. End-to-end platforms like the one created by Redpill VR are thus critical for the onboarding of a large content creator pool, and they will have to suitably bridge/interoperate with the platforms that Meta, Microsoft, Apple, NVIDIA and others are creating. For those platforms, initiatives such as Khronos' OpenXR and the Metaverse Standards Forum will be critical to limit fragmentation and ensure cross-platform application interfaces with high-performance access directly into diverse XR device runtimes.

The third requirement implies the dawn of many new types of large data sets on top of traditional audio, images and video (90+% of current Internet traffic) that will need to be efficiently exchanged within the metaverse. Examples will include immersive audio, point clouds of various nature, polygon meshes, textures, stereoscopic video, video + depth maps for reprojections, etc. The critical difference between Metaverse destinations and video games is that volumetric objects may be more difficult to pre-load and will have to rely on real-time access: much more frequently than for gaming, we will have to stream those big 3D assets in real time.
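Real-time access to volumetric assets implies some policy for deciding what to send first when not everything fits into the available bandwidth. The sketch below is a hypothetical example, not a method described by the authors: a greedy scheduler that, for each network tick, sends the assets closest to the user first and skips any that would exceed the remaining budget. The `Asset` type, the sizes and the distance-based priority are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Asset:
    name: str
    size_mb: float     # compressed size of the volumetric asset (hypothetical)
    distance_m: float  # distance from the user's current viewpoint


def schedule_tick(assets: List[Asset], budget_mb: float) -> List[str]:
    """Greedy nearest-first selection within one tick's transfer budget."""
    sent: List[str] = []
    used = 0.0
    for asset in sorted(assets, key=lambda a: a.distance_m):
        if used + asset.size_mb <= budget_mb:
            sent.append(asset.name)
            used += asset.size_mb
    return sent


scene = [
    Asset("stage", size_mb=20.0, distance_m=10.0),
    Asset("avatar", size_mb=4.0, distance_m=1.0),
    Asset("crowd", size_mb=8.0, distance_m=5.0),
]
print(schedule_tick(scene, budget_mb=15.0))  # ['avatar', 'crowd']
```

A real system would presumably weigh more factors than distance; the point of the sketch is only that assets have to be prioritized and streamed continuously rather than pre-loaded once.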

Separating rendering from display

Accessing the Metaverse will NOT exclusively require uber-immersive XR headsets. XR will bring together headsets (over 23 million by 2023 according to IDC), lighter XR viewers the size of eye-glasses and glass-free auto-stereoscopic displays with the billions of more conventional mobile phones, tablets, PCs and TVs. Traditional flat screens will display virtual destinations similarly to what is done today with 3D games.

There is a catch. To experience realistic 3D graphics, hard-core gamers equip themselves with enough graphics horsepower (read “latest-gen discrete GPU with active cooling”), while the typical mass-market XR user may just have a headset, equipped with 50x less graphics power than the typical gaming PC, and a work laptop with an iGPU, equipped with similarly underwhelming graphics power.

Put differently, since users will not accept “wearing a PlayStation 5 on their face” and we can’t assume that everyone is willing to buy a gaming PC, it is savvy to design Metaverse experiences for the lowest common denominator in compute and power.

That lowest common denominator (lightweight XR devices consuming 1 Watt) will not be anywhere near capable of performing real-time decoding of photorealistic volumetric objects AND high-framerate 3D rendering at stereoscopic 4K resolution. The laws of physics and silicon technology don’t leave room for hope: latest-gen gaming GPUs are bulky and use between 150 and 300 Watts of power to perform those tasks. Consider that your average mobile phone starts heating up when it ramps up to consume more than 4 Watts. Add the weight of cooling fans and batteries, and you get why I joked about wearing a PS5 on your face. You’re then left with mobile GPUs that can’t match the level of rendering quality that end users are expecting: you can render a simple game like Beat Saber, but you can’t possibly immerse a user in a photorealistic 6DoF experience. Plus, headsets with the power of a tier-1 mobile phone are still too bulky for average users to accept wearing them multiple hours per day. The problem is real.

So lightweight devices have a >50x graphics power gap vs the visual quality that hard-core gamers are used to experiencing. If you are hoping for this gap to be filled by faster chips and Moore’s law, I sadly bear bad news. If you want more details, check out the dedicated box in this section, otherwise just trust me that Moore’s Law and Koomey’s Law will still be with us for a little while longer, but silicon technology on the horizon will not provide a 50x processing power efficiency gain in the next decade (and possibly ever).

Processing power disparities are also relevant to interoperability, key user requirement number two. If we want Metaverse applications that are indeed “the new Web”, we must make sure that any XR device can suitably play them, with seamless interoperability. How can we efficiently cope with end user devices that may have quite literally orders of magnitude of difference in available graphics rendering power?

Since further silicon technology improvements will act as little more than a placebo, the solution inevitably lies in the good old mainframe and client-server archetypes, rebranded today as split computing. In the Eighties, a single mainframe computer was able to control real-time user interfaces for tens or even hundreds of user terminals. This same concept is set to come back and enable immersive digital worlds with seamless interoperability: for simple tasks the “mainframe” may be the mobile phone in your pocket, while as soon as you require serious rendering power you may seamlessly tap into the GPU resources of your nearest available shared computing node. Fans of Isaac Asimov may be pleased to note that, indeed, he got that aspect right too.
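The “nearest node with enough rendering power” idea can be sketched as a simple selection rule. In the hypothetical example below, each candidate rendering node (the XR glasses themselves, a phone, an edge server, a cloud data center) is described by a relative rendering capacity and a round-trip time to the display; the function picks the lowest-latency node that meets both the required capacity and a latency budget. All node names, capacity units and latency figures are illustrative assumptions, not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class RenderNode:
    name: str
    gpu_units: float  # relative rendering capacity (hypothetical units)
    rtt_ms: float     # network round-trip time to the display device


def pick_render_node(nodes: List[RenderNode], required_gpu_units: float,
                     latency_budget_ms: float) -> Optional[RenderNode]:
    """Return the lowest-latency node that is both powerful and close enough."""
    candidates = [
        n for n in nodes
        if n.gpu_units >= required_gpu_units and n.rtt_ms <= latency_budget_ms
    ]
    return min(candidates, key=lambda n: n.rtt_ms) if candidates else None


# Hypothetical tiers, from the device itself up to a remote data center.
NODES = [
    RenderNode("glasses", gpu_units=1, rtt_ms=0),
    RenderNode("phone", gpu_units=5, rtt_ms=2),
    RenderNode("edge", gpu_units=100, rtt_ms=8),
    RenderNode("cloud", gpu_units=1000, rtt_ms=40),
]

print(pick_render_node(NODES, required_gpu_units=3, latency_budget_ms=20).name)   # phone
print(pick_render_node(NODES, required_gpu_units=50, latency_budget_ms=20).name)  # edge
print(pick_render_node(NODES, required_gpu_units=500, latency_budget_ms=20))      # None
```

The last case, a workload that only a distant node can handle within a tight latency budget, illustrates why rendering may itself need to be split across stages on different machines.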

Performing rendering outside of the XR device also means streaming volumetric objects to the rendering device, which is more likely to be well connected to the Internet, especially if it sits in a data center. The XR device (wirelessly connected to the Internet via Wi-Fi or 5G, often at <50 Mbps due to distance from the access point or base station, obstacles, concurrency, and packet loss from interference) will just require enough connectivity to handle a video stream.

It’s inevitable: for 3D-graphics-hungry Metaverse applications, rendering and display must be separated. A rendering device able to both crank out and dissipate enough power, whether a handheld device, a nearby PC, or some powerful server somewhere “in the cloud”, will receive the compressed virtual objects, decode them, run the application and render the viewport, i.e., what the end user is watching at any one time. Rendering itself may even be split into separate stages on different machines, a process that NVIDIA in a popular article called “Hybrid Cloud Rendering”. For instance, a more powerful GPU could compute lighting information (possibly even using costly algorithms like ray tracing or path tracing, requiring >1,000x more computing power than typical game-engine lighting), while a less powerful nearby GPU may compute the final rendering. In other cases, for complex 3D scenes with many users, costly lighting calculations may be “pooled” for multiple users, generating intermediate volumetric data that is then transmitted to multiple local edge nodes that render the final viewport for each single user. Whether performing traditional all-in-one rendering or hybrid cloud rendering, rendering servers may thus be organized in a dynamic hierarchy, similarly to the way in which caching servers of Content Delivery Networks enable Web content delivery at scale: we call these upcoming Metaverse infrastructures “Content Rendering Networks” (CRNs), since they are a natural (r)evolution of CDNs. The resulting rendered viewport will then be cast via ultra-low-latency video streaming to the lightweight XR display device. As such, the XR device will just have to manage its sensors, process and send data on what the user is doing, and decode the received high-framerate, high-resolution video.
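The benefit of pooling costly lighting calculations across users can be shown with simple cost accounting. The sketch below assumes, purely for illustration, that each user's final viewport costs a fixed amount of GPU work and that the scene's lighting can be computed once and shared by every user in it; the cost units are hypothetical, and the cost of sending intermediate lighting data to edge nodes is ignored. Both totals still grow linearly with the number of users, as expected for 1:1 rendering, but pooling removes the expensive lighting term from the per-user slope.

```python
def total_gpu_cost(users: int, viewport_cost: float, lighting_cost: float,
                   pooled: bool) -> float:
    """Total GPU work for one frame of a shared scene (hypothetical units)."""
    if pooled:
        # Lighting computed once upstream; each edge node renders one viewport.
        return lighting_cost + users * viewport_cost
    # All-in-one rendering: every user's renderer recomputes the lighting.
    return users * (lighting_cost + viewport_cost)


for n in (1, 10, 100):
    solo = total_gpu_cost(n, viewport_cost=1.0, lighting_cost=10.0, pooled=False)
    shared = total_gpu_cost(n, viewport_cost=1.0, lighting_cost=10.0, pooled=True)
    print(f"{n:>3} users: all-in-one {solo:>6.0f}, pooled {shared:>5.0f}")
```

With these made-up numbers, 100 users cost 1,100 units with all-in-one rendering versus 110 with pooled lighting, which is the kind of saving that makes a hierarchical CRN attractive.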

That is indeed doable at photorealistic quality within 1 Watt, especially by using a low-complexity video coding method to fit within bandwidth and processing constraints.

Moore’s Law and Koomey’s Law will not fit a PS5 into a pair of XR glasses

Most modern mobile SoCs are built on so-called 5 nanometer process technology (a node name that no longer corresponds literally to transistor size), with long-term R&D suggesting that 2 nanometers may be the physical limit of silicon. One nanometer spans only a handful of silicon atoms, so we are reaching the scale where the quantum nature of electrons lets them tunnel through transistor barriers regardless of the applied voltage. As a result, while shrinking transistors further from 5 nm to 2 nm will keep increasing transistor density, power drain per transistor is unlikely to decrease much further because of quantum tunnelling. We have already seen this with the transition from 7 nm to 5 nm, which according to TSMC increased logic density by 80% but improved speed at the same power by a mere 15%. This slowdown is captured by Koomey’s Law, named after Stanford professor Jonathan Koomey.

Whereas Moore’s Law tracks the growth in transistor count per chip (and, by extension, peak computing performance), Koomey’s Law tracks the evolution of computing performance per Watt, i.e., computations per unit of energy. Sadly, the data behind Koomey’s Law show a stark slowdown in our ability to perform more computations with the same amount of energy: from a 100x improvement per decade in the 1970s (doubling every 18 months), the first two decades of the 21st century achieved roughly 15x per decade (doubling every 2.6 years), and now, for the quantum-physics reasons above, we are plateauing even further.
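The per-decade figures follow directly from the doubling periods. A quick worked check in Python (plain arithmetic on the numbers quoted above, not new data):

```python
import math

def gain_per_decade(doubling_years: float) -> float:
    """Improvement factor over 10 years for a quantity that doubles every `doubling_years`."""
    return 2 ** (10 / doubling_years)

def doubling_period(gain: float, years: float = 10.0) -> float:
    """Inverse: the doubling period implied by achieving `gain` over `years`."""
    return years * math.log(2) / math.log(gain)

print(f"{gain_per_decade(1.5):.0f}x per decade")    # 102x per decade
print(f"{gain_per_decade(2.6):.1f}x per decade")    # 14.4x per decade
print(f"{doubling_period(100):.2f} years")          # 1.51 years
print(f"{doubling_period(15):.2f} years")           # 2.56 years
```

The 2.6-year doubling period works out to about 14x per decade, consistent with the rounded “ca. 15x”.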

Of course, the above applies to traditional computing based on silicon transistor technology, and things may change in the future thanks to new disruptive technologies such as quantum computing or something else entirely. But none of that is credibly on the horizon for mass-market consumer devices within the next decade.

The split computing challenges to make XR feasible at scale

The importance of suitably handling huge volumes of data can’t be overstated when it comes to large-scale adoption of XR. Data compression and manipulation that fit the quality, bandwidth, processing and latency constraints of solid, latest-generation network connectivity are the name of the Metaverse game, with three main technical challenges to overcome:

- Suitable compression and streaming of volumetric objects to the rendering device, as well as across rendering devices, requiring new coding approaches.

Efficient compression and decompression of 3D objects requires suitable lossy coding technologies, so that point clouds, textures and meshes can be streamed effectively. For hybrid rendering use cases, intermediate byproducts will also have to be transmitted efficiently across the different nodes of a Content Rendering Network. Until now, monolithic and infrequent game-world downloads have mostly been coded losslessly (we could say “zipped”). The challenges already faced by 2D-interface virtual-world services such as Google Maps will also apply here: virtual environments need to be effectively segmented and layered so that, to extend the Google Maps analogy, the map of the whole planet Earth doesn’t need to be transmitted and decoded for a localized search in Downtown Manhattan. Flexible, software-based layered coding methods suitable for efficient massively parallel execution will be ideal. A working example has already been published: a photorealistic 6DoF experience available in the Steam Store, which received a Lumiere Award from Hollywood’s Advanced Imaging Society for its high-throughput, massively parallel point-cloud compression technology. Interestingly, this format is also a good candidate for the intermediate compressed data needed to implement hybrid cloud rendering effectively and to share costly lighting computations across multiple users within a CRN, as described earlier.

Fig. 4 – Example of photorealistic 6DoF volumetric video – previously requiring special high-performance hardware – delivered at scale and playable via standard PC-VR devices thanks to highly efficient point cloud compression.
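The Google Maps analogy from the first challenge can be made concrete with a quadtree: the world is recursively split into four tiles, and a client only requests the tiles that intersect its region of interest, at the level of detail it needs. The minimal Python sketch below is a generic illustration of that segmentation idea; the unit-square world, the tile path naming and the function names are assumptions, not the format of any specific codec.

```python
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (x0, y0, x1, y1), half-open

def intersects(a: Rect, b: Rect) -> bool:
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]

def tiles_for_roi(roi: Rect, max_level: int,
                  tile: Rect = (0.0, 0.0, 1.0, 1.0),
                  level: int = 0, path: str = "") -> List[str]:
    """Return the quadtree tile paths (e.g. '003') covering `roi` at `max_level`.
    Tiles that do not intersect the ROI are never visited, so their data
    never needs to be fetched or decoded."""
    if not intersects(tile, roi):
        return []
    if level == max_level:
        return [path]
    x0, y0, x1, y1 = tile
    xm, ym = (x0 + x1) / 2, (y0 + y1) / 2
    children = [(x0, y0, xm, ym), (xm, y0, x1, ym),
                (x0, ym, xm, y1), (xm, ym, x1, y1)]
    out: List[str] = []
    for i, child in enumerate(children):
        out += tiles_for_roi(roi, max_level, child, level + 1, path + str(i))
    return out

# A small "Downtown Manhattan" region of interest in a unit-square "world".
print(tiles_for_roi((0.1, 0.1, 0.2, 0.2), max_level=3))  # ['000', '001', '002', '003']
```

Here a small region of interest touches only 4 of the 64 finest-level tiles, so the remaining 60 never need to be transmitted or decoded.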

- Ultra-low-latency video encoding at 4K and 72 fps and beyond, at bitrates below 30-50 Mbps to cope with realistic sustained Wi-Fi / 5G rates.

For the experience not to feel “wobbly”, video casting latency must be low and consistent. Some latency can be hidden by rendering the viewport according to a forecast of where the user will be looking after the estimated system lag, and then performing a last-millisecond reprojection according to where the user is actually looking. Even so, the latency budget is still only about 20-30 milliseconds: at such ultra-low latencies, many of the latest compression efficiency tools cannot be used, which inflates the bandwidth required for a given quality. At the same time, wireless delivery imposes strict bandwidth constraints (<50 Mbps) before packet loss starts generating unsustainable latency jitter. Aside from choosing a suitable protocol (ideally with some degree of forward error correction), it is particularly useful to pair the most efficient available video codecs with low-complexity compression enhancement methods that reduce bitrate and produce layers of data that can be discarded on the fly during sudden congestion without compromising decoding of the base layer video, thereby reducing the risk of latency jitter.

- Strong network backbone, CDNs and wireless links between the rendering device and the XR display device, and sufficient cloud rendering resources (what we previously called “CRNs”).

With split computing, the non-negotiable quality of experience is obviously at risk if the network and the shared resources are not robust. Telco operators, cloud providers and CDNs will have to work extra hours to ensure that as many people as possible find themselves in the condition highlighted in point 2 above, where a user has access to a powerful GPU server within a few milliseconds of network ping and a reliable end-to-end connection of at least 30-50 Mbps. Currently, less than 10% of the population of developed countries has both subscribed to FTTH-grade connectivity and installed latest-generation Wi-Fi routers. In addition, the GPU server infrastructure available in the cloud could support only a fraction of them. Some hyperscalers and silicon manufacturers have already started to scale up dedicated infrastructure and software solutions for cloud rendering, while other relevant stakeholders such as telcos, rendering engine providers and CDNs are likely still considering their options. Some of them will strengthen their market position by either building or integrating into the tiered CRN infrastructures and protocols needed for efficient split computing. Others may spend too much time pondering, or fail to grow the necessary capabilities organically from their current business: a typical case of the “innovator’s dilemma”.
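The discardable-layer approach mentioned under the second challenge can be illustrated with a toy scheduler. This Python sketch assumes, for simplicity, that each frame consists of a mandatory base-layer payload and an optional enhancement payload, and that the link capacity for each frame interval is known in advance; the frame sizes and capacities are hypothetical. It compares a single-layer stream, where any excess data queues up and adds latency, with a layered stream that sends the base first and trims enhancement data to whatever capacity remains.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Frame:
    base_bits: int         # base layer: must always be delivered
    enhancement_bits: int  # enhancement layer: optional, may be dropped

def single_layer_backlog(frames: List[Frame], capacity_bits: List[int]) -> List[int]:
    """Everything is mandatory: data that does not fit in an interval queues up."""
    backlog, out = 0, []
    for frame, cap in zip(frames, capacity_bits):
        needed = backlog + frame.base_bits + frame.enhancement_bits
        backlog = max(0, needed - cap)
        out.append(backlog)
    return out

def schedule(frames: List[Frame], capacity_bits: List[int]) -> List[Tuple[int, int, int]]:
    """Send the base layer first, then as much enhancement data as still fits.
    Returns (base_sent, enhancement_sent, base_backlog) per frame interval;
    enhancement data that does not fit is discarded rather than queued."""
    backlog, out = 0, []
    for frame, cap in zip(frames, capacity_bits):
        base_needed = backlog + frame.base_bits
        base_sent = min(base_needed, cap)
        backlog = base_needed - base_sent
        enh_sent = min(frame.enhancement_bits, cap - base_sent)
        out.append((base_sent, enh_sent, backlog))
    return out

# Hypothetical numbers: three frames, with a bandwidth dip in the second interval.
frames = [Frame(base_bits=200_000, enhancement_bits=150_000)] * 3
caps = [400_000, 250_000, 400_000]
print(single_layer_backlog(frames, caps))  # [0, 100000, 50000]
print(schedule(frames, caps))  # [(200000, 150000, 0), (200000, 50000, 0), (200000, 150000, 0)]
```

In the congested second interval, the layered stream simply sends less enhancement data (a softer picture for one frame), whereas the single-layer stream accumulates a backlog that takes further frames to drain, which is the latency jitter described above.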

The case for low-complexity layered encoding

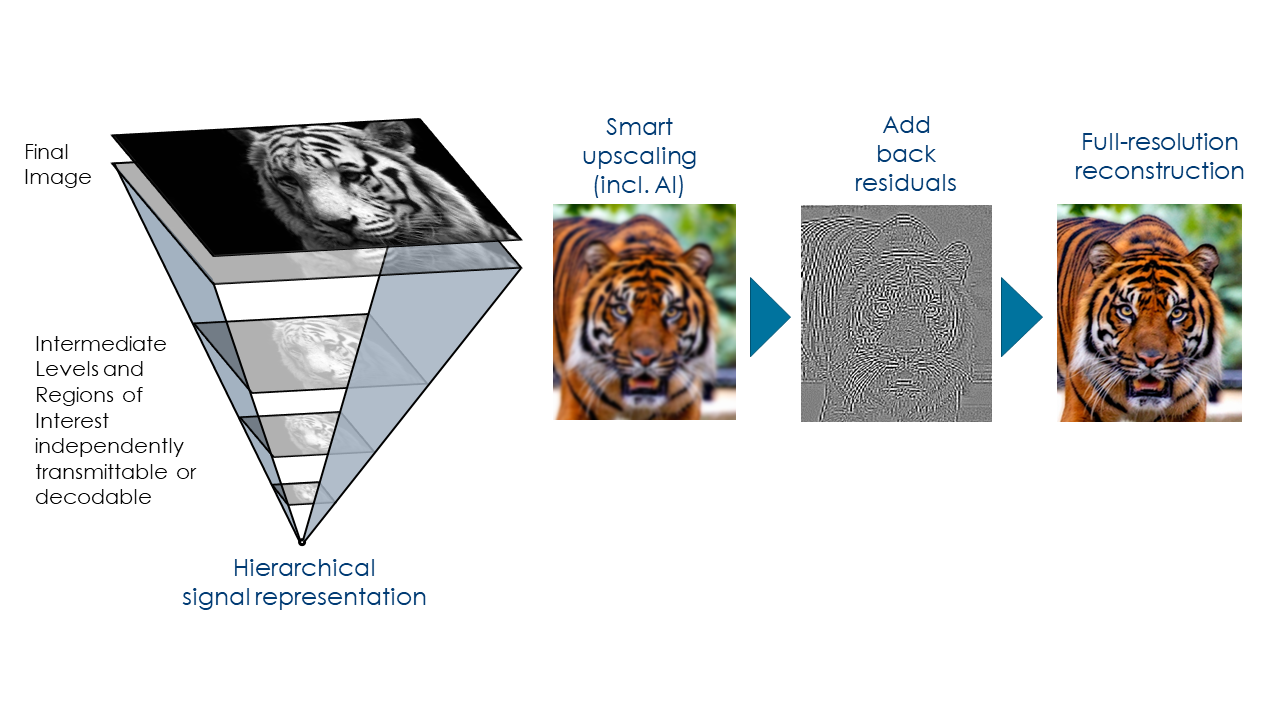

Layered encoding, i.e., structuring data in hierarchical layers that can be accessed and/or decoded as required (possibly even just within a region of interest), simply makes intuitive sense: after all, it is also the logical way we were taught to organize information at school and at work. Past attempts at layered coding achieved limited traction because layered codecs delivered lower, rather than higher, coding and processing efficiency. Luckily, the latest attempts, e.g., in the form of the MPEG-5 LCEVC (Low Complexity Enhancement Video Coding) and SMPTE VC-6 standards, do work, and many of the benefits enabled by layered coding are finally viable.

LCEVC is ISO-MPEG’s new hybrid multi-layer coding enhancement standard. It is codec-agnostic in that it combines a lower-resolution base layer, encoded with any traditional codec (e.g., H.264, HEVC, VP9, AV1 or VVC), with a layer of residual data that reconstructs the full resolution. The LCEVC coding tools are particularly well suited to compressing fine details efficiently, from both a processing and a compression standpoint, while running the traditional codec at a lower resolution makes effective use of the hardware acceleration available for that codec and makes it more efficient.
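The base-plus-residual principle can be shown with a toy example on a single row of samples. In this Python sketch the base layer is a half-resolution version obtained by averaging sample pairs (in practice it would be encoded with H.264, HEVC, etc.), and the enhancement layer is the residual between the original and the upsampled base. It is a deliberately simplified assumption: the base coding is treated as lossless, and LCEVC’s actual upsampling filters, transforms, quantization and enhancement sub-layers are not reproduced.

```python
from typing import List, Optional, Tuple

def downsample(x: List[int]) -> List[int]:
    # Base layer: average each pair of samples (assumes an even-length row).
    return [(x[i] + x[i + 1]) // 2 for i in range(0, len(x), 2)]

def upsample(b: List[int]) -> List[int]:
    # Nearest-neighbour upsampling back to full resolution (a deliberately simple filter).
    return [v for v in b for _ in range(2)]

def encode(x: List[int]) -> Tuple[List[int], List[int]]:
    base = downsample(x)                                # would go to the base codec
    prediction = upsample(base)
    residual = [a - p for a, p in zip(x, prediction)]   # enhancement layer
    return base, residual

def decode(base: List[int], residual: Optional[List[int]] = None) -> List[int]:
    prediction = upsample(base)
    if residual is None:                                # enhancement missing: softer result
        return prediction
    return [p + r for p, r in zip(prediction, residual)]

row = [10, 12, 40, 44, 80, 81, 7, 9]
base, residual = encode(row)
print(base)                           # [11, 42, 80, 8]
print(residual)                       # [-1, 1, -2, 2, 0, 1, -1, 1]
print(decode(base, residual) == row)  # True
print(decode(base))                   # [11, 11, 42, 42, 80, 80, 8, 8]
```

Decoding without the residual still yields a complete, softer picture, which is what makes the enhancement data droppable; the residuals themselves are small values that are cheap to compress.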

LCEVC has already been selected as a mandatory component of the upcoming SBTVD TV 3.0 broadcast and broadband specifications, and has demonstrated significant benefits for both traditional broadcast and streaming video. On top of that, LCEVC has been shown to be a key enabler of ultra-low-latency XR streaming, with benefits such as the following:

- Remarkable compression benefits for all available hardware codecs (H.264, HEVC or AV1). The LCEVC compression enhancement toolkit mostly operates in the spatial domain, so its benefits remain very effective for ultra-low-latency coding, where, by definition, most temporal compression techniques of latest-generation codecs like AV1 or VVC (such as pyramids of bi-predicted references that rely on subsequent frames) can’t be employed. Beyond the material efficiency gains relative to non-enhanced encoding (typically above 30-40%), what matters is that with LCEVC the absolute bandwidth fits within the constraints. LCEVC enables high-framerate stereoscopic 4K within 25-50 Mbps, which is critical to high-quality wireless casting and cloud XR streaming.

Around 30 Mbps, the gap in subjective quality often separates an unpleasant experience from one that is fit for purpose, as illustrated by the example snapshots below comparing native NVENC (left) with LCEVC-enhanced NVENC (right), both at 25 Mbps with ultra-low-latency settings, for both H.264 (top) and HEVC (bottom). Notably, reducing bandwidth also reduces transmission latency for a given quality.

Fig. 5 – With immersive interfaces, visual quality can drastically impact Quality of Experience, even more than with traditional OTT video streaming. Viewed in stereoscopic immersion, even relatively subtle impairments like the ones shown on the bottom left can quickly make the experience jarring.

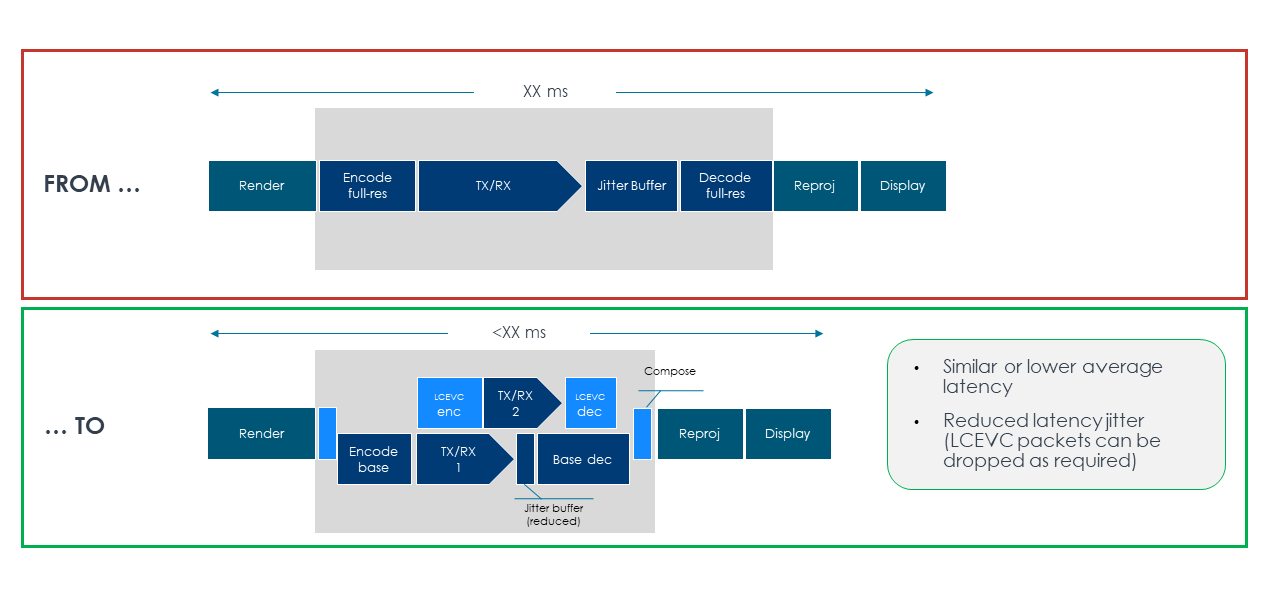
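Some back-of-the-envelope arithmetic shows why these bitrates are demanding and why lower bandwidth also lowers latency. The Python sketch below assumes, purely for illustration, a 3840x2160 frame carrying both eyes at 72 fps, a 50 Mbps wireless link, and a hypothetical 40 Mbps non-enhanced stream versus a 25 Mbps enhanced one (about 37% less, in the range quoted above); none of these are measured values.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of coded bits available per pixel."""
    return bitrate_bps / (width * height * fps)

def avg_frame_bits(bitrate_bps: float, fps: float) -> float:
    """Average size of one coded frame, in bits."""
    return bitrate_bps / fps

def serialization_ms(frame_bits: float, link_bps: float) -> float:
    """Time needed just to push one average frame onto a link of `link_bps`."""
    return 1000 * frame_bits / link_bps

W, H, FPS = 3840, 2160, 72   # assumed: both eyes packed into one 4K frame
LINK_BPS = 50e6              # assumed sustained wireless rate

for mbps in (25, 40):        # hypothetical enhanced vs non-enhanced bitrates
    bpp = bits_per_pixel(mbps * 1e6, W, H, FPS)
    t = serialization_ms(avg_frame_bits(mbps * 1e6, FPS), LINK_BPS)
    print(f"{mbps} Mbps: {bpp:.3f} bits/pixel, {t:.1f} ms per frame on a 50 Mbps link")
# 25 Mbps: 0.042 bits/pixel, 6.9 ms per frame on a 50 Mbps link
# 40 Mbps: 0.067 bits/pixel, 11.1 ms per frame on a 50 Mbps link
```

At these rates the encoder has only a few hundredths of a bit per pixel to work with, and every average frame spends several milliseconds just being serialized onto the link, so a lower bitrate directly trims latency.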

- Unique reduction of latency jitter, thanks to the multi-layer structure inherent in LCEVC. The LCEVC enhancement NAL units can be transmitted in a separate, lower-priority data channel and dropped on the fly (even in the middle of frame transmission, after encoding) in case of network congestion, without compromising the base layer and with minimal impact on visual quality (i.e., the picture will look softer for a couple of frames, without evident space-correlated impairments). This makes it possible to react immediately to the frequent bandwidth oscillations and packet loss inherent to wireless transmission, avoiding a pile-up of tens of milliseconds of latency jitter at every sudden drop in bandwidth. In addition, from an overall end-to-end system latency point of view, LCEVC makes it possible to start transmitting and decoding the base layer while the enhancement layer is still being encoded, providing an additional degree of freedom to reduce coding latency.

- Low processing complexity, allowing LCEVC to be used immediately via efficient heterogeneous processing that leverages general-purpose accelerated resources such as SIMD pipelines, shaders, scalers, graphic-overlay blocks and/or tensor cores, with limited resource overhead compared with native hardware encoding/decoding. With dedicated silicon acceleration, for which small-footprint IP blocks are already available, LCEVC further pushes the envelope of efficient encoding/decoding at extremely high resolutions, frame rates and bit depths, while also reducing memory buffer requirements compared with traditional single-layer coding. In practice, efficient software processing makes LCEVC immediately applicable to current headsets and improves the specs of existing SoCs for upcoming devices, while the small-footprint silicon implementation is ideal for new chips able to support retina resolutions on ultra-low-power smart glasses.

- High bit-depth HDR. With Meta’s Starburst and Mirror Lake prototypes, Mark Zuckerberg recently illustrated the importance of HDR for realistic immersive experiences, and we know from previous analyses that with HDR, the higher the bit depth, the better. LCEVC leverages a 16-bit accelerated pipeline, so it can encode HDR video at 12-bit or 14-bit without any processing and/or bitrate overhead, while still using the available 8-bit or 10-bit base layer codecs.

- Ability to send 14-bit depth maps along with the video, enabling more realistic depth-based reprojections and eye-tracking-based varifocal adjustments, for a better overall visual experience. In the absence of depth maps, the reprojection executed immediately before display (to compensate for the difference between the actual viewport and the viewport forecast during rendering) is performed by means of a “flat” reprojection. This is one of the main visual impairments generated by system latency, whereas depth maps allow for so-called “space warping”, which performs a more realistic reprojection with parallax. Depth maps are also useful with eye tracking and upcoming varifocal headsets: the local device can know the depth of the AR/VR object the user is looking at and adjust the display focal length accordingly, avoiding the vergence-accommodation conflict (a major step towards visual realism in VR, and pretty much a necessity in AR). Last but not least, for many use cases depth maps also make it possible to reduce the frame rate rendered and streamed to the XR device, thanks to more realistic temporal upscaling via space warping before display (e.g., rendering and streaming at 60 fps instead of 90 fps, and then displaying at 120 fps via depth-based reprojected interpolations). The fast software-based coding tools of LCEVC (executed via 16-bit accelerated pipelines) enable effective delivery of depth maps along with the video whenever sufficient resources and/or bandwidth are available. Besides making video plus 14-bit depth practical from a processing standpoint, the overall bandwidth reduction enabled by LCEVC is also critical to fitting depth map transmission within the available bandwidth constraints.
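To see why depth maps matter, consider a small sideways head movement after a frame was rendered. Head rotation alone can be corrected without depth, but translation produces parallax. Under a simple pinhole-camera model (an assumption for illustration), a point at depth z shifts on screen by roughly f·t/z pixels, where f is the focal length in pixels and t the lateral translation. A flat reprojection applies one assumed depth to the whole image, while depth-based reprojection (“space warping”) uses each pixel’s own depth. The focal length, translation and depths in this Python sketch are hypothetical values.

```python
from typing import List

def parallax_shift_px(focal_px: float, translation_m: float, depth_m: float) -> float:
    """Horizontal screen shift of a point at `depth_m` after a sideways camera
    translation of `translation_m` (pinhole model, small motion)."""
    return focal_px * translation_m / depth_m

def flat_reprojection(depths: List[float], focal_px: float, t: float,
                      assumed_depth: float) -> List[float]:
    # No depth map: every pixel is treated as if it were at the same depth.
    return [parallax_shift_px(focal_px, t, assumed_depth) for _ in depths]

def depth_reprojection(depths: List[float], focal_px: float, t: float) -> List[float]:
    # With a per-pixel depth map: near pixels move more than far ones.
    return [parallax_shift_px(focal_px, t, z) for z in depths]

depths = [0.5, 2.0, 10.0]   # e.g. a hand, a table, a far wall (metres)
f, t = 1000.0, 0.01         # 1000 px focal length, 1 cm sideways head movement
print(depth_reprojection(depths, f, t))                    # [20.0, 5.0, 1.0]
print(flat_reprojection(depths, f, t, assumed_depth=2.0))  # [5.0, 5.0, 5.0]
```

With the flat assumption, the nearby hand is displaced 15 pixels too little and the far wall 4 pixels too much, which is the kind of latency-induced impairment that transmitted depth maps help avoid.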

Importantly, the above benefits can be achieved while keeping average system latency to a minimum. Since LCEVC natively splits the computation into separate independent processes (a sort of “vertical striping”) without compromising compression efficiency, it is possible to structure the end-to-end pipeline for maximum parallel execution, as illustrated in the conceptual diagram below:

Suitable ultra-low-latency implementation of LCEVC-enhanced casting can thus produce an ideal combination of visual quality enhancement, minimum average latency and reduction of latency jitter, making the case for split computing and wireless XR streaming at suitable qualities. That would be an example of “making the future of digital come alive”, enabling creatives and digital businesses alike to design a whole new world of impactful experiences and monetizable services.
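The latency benefit of overlapping stages can be illustrated with simple arithmetic. The Python sketch below compares a strictly sequential pipeline with one in which the base layer is transmitted and decoded while the enhancement layer is still being encoded. It is a simplified model under stated assumptions: the stage durations are hypothetical, the enhancement encoder is assumed to start once the base is encoded, both layers share one link, and queueing and jitter are ignored.

```python
from dataclasses import dataclass

@dataclass
class StageTimes:
    # Hypothetical per-frame stage durations, in milliseconds.
    base_encode: float
    enh_encode: float
    base_tx: float
    enh_tx: float
    base_decode: float
    enh_apply: float

def sequential_latency(s: StageTimes) -> float:
    # Each stage waits for the previous one to finish completely.
    return s.base_encode + s.enh_encode + s.base_tx + s.enh_tx + s.base_decode + s.enh_apply

def overlapped_latency(s: StageTimes) -> float:
    base_tx_done = s.base_encode + s.base_tx            # base sent as soon as it is encoded
    base_decoded = base_tx_done + s.base_decode         # decoder starts on the base right away
    enh_encoded = s.base_encode + s.enh_encode          # runs in parallel with base transmission
    enh_tx_done = max(enh_encoded, base_tx_done) + s.enh_tx  # shared link: after the base
    return max(base_decoded, enh_tx_done) + s.enh_apply

s = StageTimes(base_encode=3.0, enh_encode=2.0, base_tx=4.0,
               enh_tx=2.0, base_decode=3.0, enh_apply=1.0)
print(sequential_latency(s))  # 15.0
print(overlapped_latency(s))  # 11.0
```

With these placeholder numbers the overlapped schedule saves 4 ms per frame; the actual gain depends on the relative stage durations.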

LCEVC also provides significant benefits in the context of MPEG Immersive Video (“MIV”), a collection of new video-codec-agnostic standards that represent immersive media by creatively leveraging video coding. In that case, LCEVC is used to enhance both the compression and the processing efficiency of either HEVC or VVC for geometry information (depth, where once again LCEVC also allows encoding at 14-bit rather than 10-bit) as well as for atlases of attribute information such as texture and transparency.

Further elaborating on the multi-layer and low-complexity coding toolkit of LCEVC, SMPTE VC-6 (SMPTE ST 2117-1) is another coding standard particularly suited for Metaverse datasets, thanks to its recursive multi-layer scheme, its use of S-trees and its extensible coding toolkit, which uniquely includes massively parallel entropy coding and optional use of in-loop neural networks.

Aside from its applications in image/texture compression, professional video workflows and AI media indexing acceleration, a variant of VC-6 for point cloud compression was used to compress the PresenZ volumetric video format, the only media format capable of rendering photorealistic 6DoF volumetric experiences. As already mentioned, an example experience is available in the Steam Store and recently received a Lumiere Award. Uniquely, the codec didn’t just compress the huge data set (50+ Gbps) to make it deliverable to end users: it also demonstrated extra-fast software decompression, decoding in real time while leaving enough free processing resources for high-resolution, high-framerate real-time rendering.

Low-complexity multi-layer coding is already enabling the Metaverse in practice, and it is worth noting that it also makes sense in theory.

After all, in the Metaverse, 3D-object decompression, graphics rendering and viewport compression are all going to be closely linked, creating a need for hierarchical data structures like those already used (without much compression) in today’s graphics engines: think of it like a mipmap or a mesh where the higher quality texture or polygon model can be obtained from the lower quality version, adding some data that specify details. As you get closer to an object, you fetch more data. You don’t always need full detail for everything in memory (or in a computing node), exactly like you don’t need the full map of New York when you’re zooming in on Downtown Manhattan in Google Maps. Further, if decoding is extra-fast and massively parallel, you can operate directly on compressed rather than uncompressed data, maximizing the level of realism that can be handled with a given memory bandwidth.
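A minimal sketch of “as you get closer to an object, you fetch more data”, assuming a hypothetical object stored as a base level plus refinement layers, where each layer doubles linear detail (as with mipmap levels). The distance rule, the 1 m full-detail distance and the layer sizes are illustrative assumptions, not taken from any particular engine or codec.

```python
import math
from typing import List

def layers_needed(distance_m: float, full_detail_distance_m: float, num_layers: int) -> int:
    """Number of refinement layers to fetch on top of the base level.
    Assumes each layer doubles linear detail, so every halving of the viewing
    distance (below the distance where the base level suffices) needs one more layer."""
    base_distance = full_detail_distance_m * 2 ** num_layers  # base alone suffices beyond this
    if distance_m >= base_distance:
        return 0
    extra = math.ceil(math.log2(base_distance / distance_m))
    return min(extra, num_layers)

def bytes_to_fetch(distance_m: float, layer_sizes: List[int],
                   full_detail_distance_m: float = 1.0) -> int:
    # layer_sizes[0] is the base level; the remaining entries are refinement layers.
    n = layers_needed(distance_m, full_detail_distance_m, len(layer_sizes) - 1)
    return sum(layer_sizes[: 1 + n])

sizes = [10_000, 30_000, 120_000, 480_000]  # hypothetical base + 3 refinement layers (bytes)
for d in (20, 5, 2, 0.5):
    print(d, bytes_to_fetch(d, sizes))
# 20 10000
# 5 40000
# 2 160000
# 0.5 640000
```

Distant objects cost only their base level, and the full 640 kB is fetched only when the viewer is close enough to see the difference.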

On top of these benefits, hierarchical multi-layer signal formats also help AI-based indexers, making them more accurate and faster in classification tasks, which is of great benefit to metaverse bots. Lower-quality signal renditions make it possible to rapidly detect the areas that require further classification, and region-of-interest high-resolution decoding can then be performed only for the areas of particular interest, with multiple classifiers operating differently and in parallel on the same compressed data set, possibly distributed across multiple nodes.
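A toy Python sketch of coarse-to-fine analysis on a hierarchical representation: a cheap detector scans a low-resolution rendition, and only the blocks it flags are extracted at full resolution and passed to a more expensive classifier. The tiny image, the threshold detector and the classifier are hypothetical stand-ins for real decoders and AI models.

```python
from typing import Callable, List, Tuple

Image = List[List[int]]

def downscale(img: Image, k: int) -> Image:
    """Low-resolution rendition: mean of each k x k block (stand-in for a lower layer)."""
    h, w = len(img), len(img[0])
    return [[sum(img[y][x] for y in range(by, by + k) for x in range(bx, bx + k)) // (k * k)
             for bx in range(0, w, k)]
            for by in range(0, h, k)]

def coarse_to_fine(img: Image, k: int, is_candidate: Callable[[int], bool],
                   classify: Callable[[Image], str]) -> List[Tuple[int, int, str]]:
    results = []
    for by, row in enumerate(downscale(img, k)):
        for bx, v in enumerate(row):
            if not is_candidate(v):
                continue  # region skipped: never "decoded" at full resolution
            block = [r[bx * k:(bx + 1) * k] for r in img[by * k:(by + 1) * k]]
            results.append((by, bx, classify(block)))
    return results

def classify(block: Image) -> str:
    # Hypothetical "expensive" classifier, only run on flagged regions.
    return "bright" if all(v > 5 for r in block for v in r) else "mixed"

img = [[0, 0, 9, 9],
       [0, 0, 9, 8],
       [0, 0, 0, 0],
       [0, 0, 0, 0]]
print(downscale(img, 2))                                 # [[0, 8], [0, 0]]
print(coarse_to_fine(img, 2, lambda v: v > 4, classify))  # [(0, 1, 'bright')]
```

Only one of the four blocks reaches the expensive classifier; in a real system the other regions would never be decoded at full resolution, and different classifiers could run on different regions in parallel.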

Data types will multiply in the metaverse: specific applications will require their own mix of point clouds, meshes, light fields, ancillary data for physics engines, haptic data, textures and augmented video. They will require forms of progressive and region-of-interest partial decoding, and may have to work on data with variable bit depth, sometimes higher than 10 bits (e.g., for depth maps or point cloud coordinates).

Low-complexity multi-layered coding, able to execute efficiently in software on general-purpose parallel hardware, is the way to make this happen at scale, interoperably across a variety of devices with different display screens and processing power. Flexibility is crucial to ensure these coding schemes can run both as ASICs/FPGAs with very low gate count and as software on general-purpose graphics hardware at low power consumption.

Such design criteria are core to the multi-layered coding standards MPEG-5 LCEVC and SMPTE VC-6, while the video-based MPEG Immersive Video (MIV) standards and the volumetric data compression projects within MPEG will also provide synergistic tools to address some of these issues. Suitably leveraging these latest coding standards within a split-computing and hybrid rendering framework can make high-quality XR feasible and interoperable.

Shipping better devices, laying fiber, rolling out 5G and building new data centers are also a must, but on their own they cannot solve the problem. All available tricks will have to be used.

More quality with less energy

Sustainability is also a consideration. In a nutshell, we need compelling, disruption-free XR workflows while keeping energy bills to a minimum.

It’s very difficult to provide reliable numbers, and laudable initiatives like Greening of Streaming (which we support) are springing up to provide more clarity on what to measure and how to measure it. That said, the environmental impact of media delivery is certainly relevant and fast-growing. Data centers are estimated to account for around 2% of global greenhouse gas emissions, on a par with the global airline industry, and to use approximately 200 terawatt-hours of electricity per year. Add the energy consumed by the remaining delivery elements of video streaming, account for a 50% annual growth rate in usage, remember that Moore’s Law will no longer help much in terms of power consumption per unit of processing, and then add the Metaverse to the mix: that number is poised to soon become a double-digit percentage of total global energy consumption.

Since XR rendering and video casting are necessarily 1:1 operations, their power usage will grow linearly with the number of users, unlike broadcasting and video streaming. Hybrid rendering, bandwidth efficiency and low-processing approaches are going to be critical to the overall sustainability of the Metaverse, adding one more brick to the case for low-complexity multi-layered coding.
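The scaling argument can be captured in a toy model with made-up numbers (assumptions, not measurements): broadcast-style delivery has a shared cost that does not grow with the audience, while XR rendering and casting are paid once per user. The 50% annual growth rate is the one quoted above; the kW figures in this Python sketch are hypothetical.

```python
def delivery_power_kw(users: int, shared_kw: float, per_user_kw: float) -> float:
    """Toy model: a fixed shared cost plus a cost paid once per user."""
    return shared_kw + users * per_user_kw

def compound_growth(years: int, rate: float = 0.5) -> float:
    """Growth factor after `years` at a constant annual `rate`."""
    return (1 + rate) ** years

for users in (1_000, 1_000_000):
    broadcast = delivery_power_kw(users, shared_kw=5.0, per_user_kw=0.0)  # one shared encode
    xr = delivery_power_kw(users, shared_kw=0.0, per_user_kw=0.25)        # one render per user
    print(users, broadcast, xr)
# 1000 5.0 250.0
# 1000000 5.0 250000.0

print(compound_growth(5))  # 7.59375
```

Five years of 50% annual growth alone multiply usage by about 7.6x, before accounting for any per-user XR workload.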

Making the Metaverse a reality

The ever-expanding volume of diverse 3D data and the tight video streaming constraints of split computing are key challenges to address to ensure that immersive worlds can be delivered to end users interoperably and at scale.

As highlighted above, rapidly adopting available multi-layer coding standards as well as developing new ones from the same IP/toolkits can materially accelerate the development of high quality and interoperable Metaverse destinations.

In five to ten years, we can safely expect any Internet destination and software application to have some XR functionality, so as to be considered part of “the Metaverse” as much as today we consider every site and app as part of “the Web”.

I’m much more interested in what is going to happen in the next three years, and apparently many Big Tech companies are investing tens of billions of dollars to suitably position themselves within this new tech Gold Rush. Like we have seen with the Web, as some titans aptly surf the new tidal wave, others will falter, and new ones may grow.

The ability to tackle the data volume challenges, promptly leveraging the approaches and standards that are already available, will be one of the key factors driving the speed at which the XR meta-universe reaches the masses, as well as which tech titans end up on top of the hill.